Retrieval-Augmented Generation (RAG) is a sophisticated approach in artificial intelligence that combines retrieval-based and generative models to improve machine understanding and response generation. It represents a significant advancement in AI, particularly in natural language processing (NLP). In this article, we’ll delve into how RAG works, its applications, and the metrics used to evaluate its performance.

How RAG Works

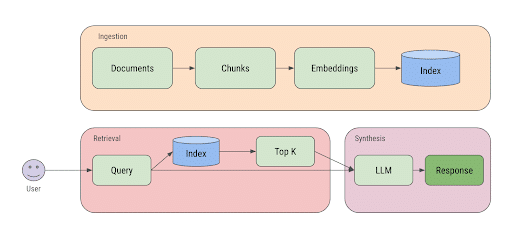

RAG operates by integrating two principal components: a retrieval system and a generative model. The process starts with the retrieval system, which is tasked with sourcing relevant information from a large dataset or knowledge base. This information typically consists of documents, passages, or database entries that are pertinent to the input query.

Once the relevant information is retrieved, it is passed to the generative model. This model, often based on a transformer architecture such as GPT, uses the retrieved data to generate a coherent and contextually appropriate response. Because the retrieved passages become part of its input, the model can ground its output in them and give answers that are more accurate, detailed, and relevant than it could produce from its training data alone.
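A minimal sketch of this retrieve-then-generate loop follows. To stay self-contained it assumes a toy word-overlap retriever in place of a real search index. It also takes the generative model as a plain function argument, `generate`, which is a hypothetical stand-in for whatever LLM client you use. No particular library's API is implied.

```python
import re
from typing import Callable


def tokenize(text: str) -> set[str]:
    """Lowercased word set; deliberately naive, for illustration only."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, knowledge_base: list[str], k: int = 2) -> list[str]:
    """Toy retriever: rank passages by how many query words they share."""
    query_words = tokenize(query)
    ranked = sorted(knowledge_base, key=lambda p: len(query_words & tokenize(p)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Augmentation: place the retrieved passages ahead of the question."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


def rag_answer(query: str, knowledge_base: list[str],
               generate: Callable[[str], str], k: int = 2) -> str:
    """Retrieve, build the augmented prompt, then hand it to the generator."""
    passages = retrieve(query, knowledge_base, k)
    return generate(build_prompt(query, passages))


if __name__ == "__main__":
    kb = [
        "The Golden Gate Bridge opened in 1937.",
        "Paris is the capital of France.",
        "The Eiffel Tower is in Paris.",
    ]
    # Echo the prompt instead of calling a real model, to inspect what the LLM would see.
    print(rag_answer("What is the capital of France?", kb, generate=lambda prompt: prompt))
```

Passing `generate` in as a function keeps the retrieval and prompt-assembly logic testable without a model. A lambda that returns the prompt unchanged shows exactly what the LLM would receive.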

Key Components of RAG

Ingestion: The process by which information is transformed into a searchable format for the retrieval system. The resulting index could live in a vector database, Elasticsearch, or a data warehouse.

Retrieval System: This can rely on traditional information retrieval techniques (SQL queries, BM25 keyword ranking, Elasticsearch) or on neural models that encode the query and the documents as embeddings and return the top-k passages by semantic similarity.

Synthesis: Typically a large language model (LLM) trained on a diverse corpus of text. The model learns to predict text sequences, which lets it generate plausible text conditioned on the inputs it receives, including the retrieved context.
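The ingestion and retrieval components can be sketched in plain Python. This sketch makes several assumptions. Chunking uses fixed-size word windows with overlap, which is one common choice among many. Lexical top-k retrieval uses the classic BM25 ranking function with a Lucene-style IDF. Everything is kept in memory rather than in Elasticsearch or a vector database. The chunk sizes and the `k1`/`b` defaults are illustrative.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Ingestion step: split a document into overlapping windows of words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("require 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):  # this window already reaches the end
            break
    return chunks


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25Index:
    """Retrieval step: in-memory BM25 ranking over the ingested chunks."""

    def __init__(self, chunks: list[str], k1: float = 1.2, b: float = 0.75):
        self.chunks = chunks
        self.k1, self.b = k1, b
        self.term_freqs = [Counter(tokenize(c)) for c in chunks]
        self.lengths = [sum(tf.values()) for tf in self.term_freqs]
        self.avg_len = sum(self.lengths) / len(chunks) if chunks else 0.0
        doc_freq = Counter()
        for tf in self.term_freqs:
            doc_freq.update(tf.keys())  # count each term once per chunk
        n = len(chunks)
        # Lucene-style IDF: log(1 + (N - df + 0.5) / (df + 0.5)), always positive.
        self.idf = {t: math.log(1 + (n - df + 0.5) / (df + 0.5)) for t, df in doc_freq.items()}

    def score(self, query: str, i: int) -> float:
        tf, length = self.term_freqs[i], self.lengths[i]
        total = 0.0
        for term in tokenize(query):
            f = tf[term]
            if f == 0:
                continue
            # k1 saturates repeated terms; b normalizes for chunk length.
            denom = f + self.k1 * (1 - self.b + self.b * length / self.avg_len)
            total += self.idf[term] * f * (self.k1 + 1) / denom
        return total

    def top_k(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        scores = [self.score(query, i) for i in range(len(self.chunks))]
        ranked = sorted(range(len(self.chunks)), key=lambda i: scores[i], reverse=True)
        return [(self.chunks[i], scores[i]) for i in ranked[:k]]
```

Replacing `BM25Index` with an embedding model plus cosine similarity would give the semantic-search variant. The chunking step stays the same either way.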

Applications of RAG

RAG is highly versatile, with applications across various domains:

Question Answering Systems: RAG can significantly enhance the performance of QA systems by providing more precise answers that are supported by extracted evidence from relevant documents.

Chatbots and Virtual Assistants: By leveraging RAG, these systems can provide more informative, accurate, and contextually relevant responses, improving user interaction.

Content Recommendation: RAG can be used to generate personalized content recommendations by retrieving user-relevant information and generating suggestions accordingly.

Use Case Scenario

Let’s look at the example of a road trip planning tool. Imagine an app where a user can specify the origin, final destination and time frame for a road trip. The app then generates a recommended route and itinerary, including places to visit, where to eat, and which detours to take, each with a schedule.

One might think an LLM could solve this by itself, but an LLM alone would produce results that are neither accurate nor consistent. Its knowledge is also fixed at the date it was trained, so it cannot account for current weather conditions, environmental warnings, promotions, user preferences, or anything else that changes daily. A retrieval system, on the other hand, is well suited to querying a dataset with all of these constraints applied.

A strong combination for this scenario is to use a retrieval system to plan the route and itinerary. The LLM then enriches the final output for the user and presents it as a cohesive promotional opportunity.
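The sketch below makes the division of labour concrete. The retrieval step applies the day-specific constraints from the scenario, such as closures, weather warnings and user preferences, as hard filters over a hypothetical `Stop` dataset. Only the surviving stops are handed to the LLM as context. The `Stop` fields, the filtering rules and the prompt wording are illustrative assumptions. The input list is assumed to already follow the route order. A real app would query live data sources and a routing service instead.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class Stop:
    """Hypothetical point of interest along a route (illustrative schema)."""
    name: str
    category: str                                  # e.g. "restaurant", "viewpoint", "museum"
    town: str
    closed_on: set[date] = field(default_factory=set)  # dates the stop is closed
    weather_warning: bool = False                  # active weather/environmental warning
    promotion: Optional[str] = None                # current promotion, if any


def retrieve_stops(stops: list[Stop], route_towns: set[str], trip_day: date,
                   preferred_categories: set[str]) -> list[Stop]:
    """Retrieval: apply today's constraints as hard filters, keeping route order."""
    return [
        s for s in stops
        if s.town in route_towns
        and trip_day not in s.closed_on
        and not s.weather_warning
        and s.category in preferred_categories
    ]


def itinerary_prompt(stops: list[Stop], trip_day: date) -> str:
    """Augmentation: give the LLM only the vetted stops to write up."""
    lines = []
    for s in stops:
        line = f"- {s.name} ({s.category}, {s.town})"
        if s.promotion:
            line += f" | promotion: {s.promotion}"
        lines.append(line)
    return (
        f"Write a friendly road-trip itinerary for {trip_day.isoformat()} "
        "that visits only these stops, in this order:\n" + "\n".join(lines)
    )
```

Keeping the constraints in the retrieval step means the LLM never sees a closed museum or a stop under a weather warning. This leaves it free to focus on presentation rather than fact-checking.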

Metrics for Evaluating RAG

Evaluating a RAG system involves a variety of metrics that measure both the accuracy of the retrieved information and the quality of the generated responses:

BLEU Score: A metric originally designed for machine translation. It evaluates the quality of generated text by measuring how many of its n-grams also appear in one or more reference texts (n-gram precision).

ROUGE Score: A recall-oriented metric originally designed for summarization. It counts overlapping units such as n-grams, word sequences, and word pairs between the generated text and the reference texts.

Context Relevance: To verify the quality of retrieval, we check that each retrieved chunk of context is relevant to the input. The LLM uses this context to form its answer, so irrelevant or incorrect context can surface in the answer as a hallucination.

Groundedness: After generation, we verify that the response is grounded. To do this, we split it into individual statements and independently search the retrieved context for evidence that supports each one.

Answer Relevance: The response must actually answer the original question. We verify this by evaluating how relevant the final answer is to the user input.

Human Evaluation: Subjective analysis where human judges assess the relevance, coherence, informativeness, and fluency of the responses.
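The counting behind several of these metrics is simple enough to sketch directly. The functions below show BLEU's clipped (modified) n-gram precision, ROUGE-N recall, and a groundedness check that follows the split-and-verify procedure described above. In practice, groundedness, context relevance and answer relevance are typically scored by an LLM or another model acting as a judge. Tools such as TruLens implement them as feedback functions. The word-overlap test and its 0.5 threshold here are purely illustrative stand-ins for such a judge.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def ngrams(tokens: list[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_precision(candidate: str, reference: str, n: int = 1) -> float:
    """BLEU's modified n-gram precision: candidate counts are clipped by reference counts."""
    cand, ref = ngrams(tokenize(candidate), n), ngrams(tokenize(reference), n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    return sum(min(c, ref[g]) for g, c in cand.items()) / total


def rouge_n_recall(candidate: str, reference: str, n: int = 1) -> float:
    """ROUGE-N recall: share of reference n-grams that also occur in the candidate."""
    cand, ref = ngrams(tokenize(candidate), n), ngrams(tokenize(reference), n)
    total = sum(ref.values())
    if total == 0:
        return 0.0
    return sum(min(c, cand[g]) for g, c in ref.items()) / total


def groundedness(response: str, context: str, threshold: float = 0.5) -> float:
    """Toy proxy: split the response into sentences, count those whose words mostly occur in the context."""
    context_words = set(tokenize(context))
    statements = [s for s in re.split(r"(?<=[.!?])\s+", response.strip()) if tokenize(s)]
    if not statements:
        return 0.0
    supported = 0
    for s in statements:
        words = set(tokenize(s))
        if len(words & context_words) / len(words) >= threshold:
            supported += 1
    return supported / len(statements)
```

Full BLEU combines the clipped precisions for n = 1 to 4 with a brevity penalty. Libraries such as NLTK and `rouge-score` implement the complete metrics. The functions above only show the core counting.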

Some Technologies Behind RAG

LlamaIndex: a simple, flexible data framework for connecting custom data sources to large language models. It includes tools that make RAG development faster and easier.

TruLens: a software tool that helps you to objectively measure the quality and effectiveness of your LLM-based applications using feedback functions.

Chroma: an open-source vector database, well suited to storing documents and their embeddings for retrieval in RAG systems.

Elasticsearch: a distributed search and analytics engine that supports both full-text (BM25) search and vector search, so it can serve as the retrieval layer of a RAG system.

Conclusion

Retrieval-Augmented Generation represents a powerful blend of retrieval-based and generative technologies, offering a significant improvement in AI’s ability to process and generate human-like text. As these systems continue to evolve, their impact is expected to grow, leading to more sophisticated and useful AI applications across various sectors. Whether enhancing the capabilities of chatbots, aiding in complex research tasks, or improving user interaction with intelligent systems, RAG stands as a pivotal innovation in the field of artificial intelligence.

Discover how these technical insights are being translated into strategic digital transformation initiatives across various sectors in Gangverk’s detailed discussion on the practical applications of RAG.